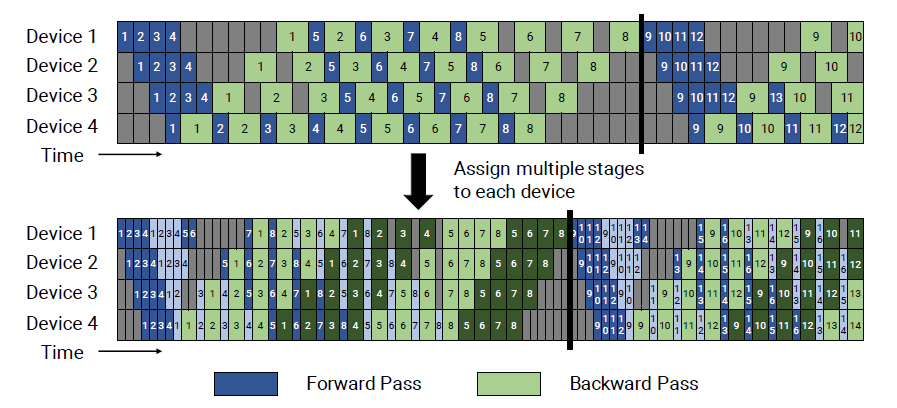

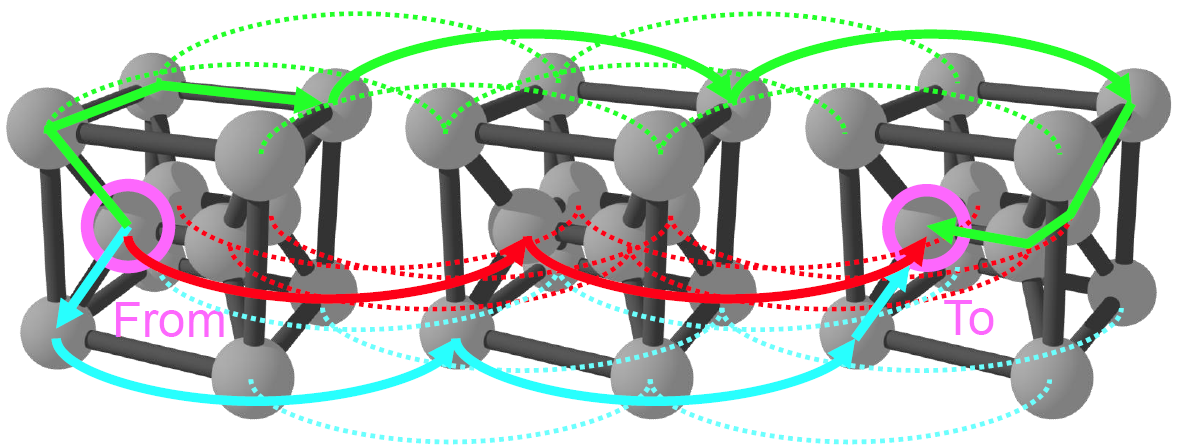

3D并行(3D Parallelism)

参考文献: Narayanan D, Shoeybi M, Casper J, et al. Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM: SC' 21, November 14-19

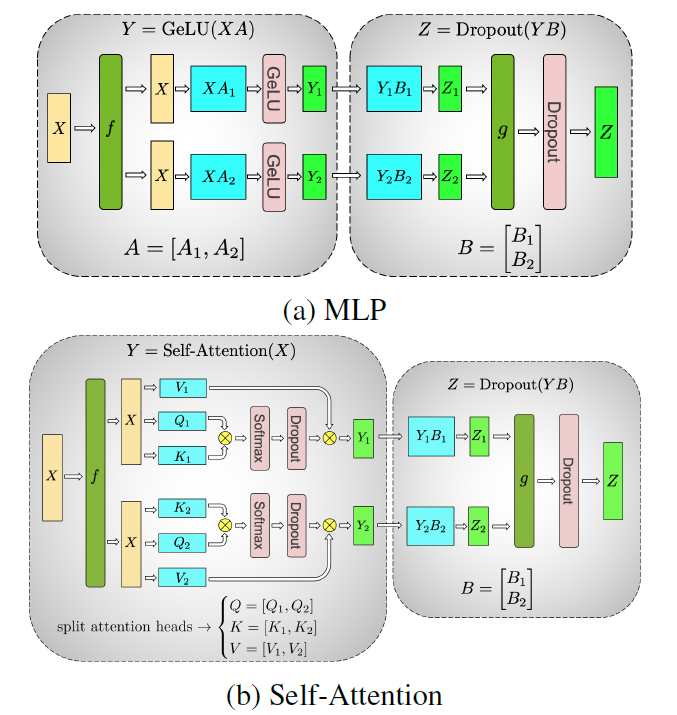

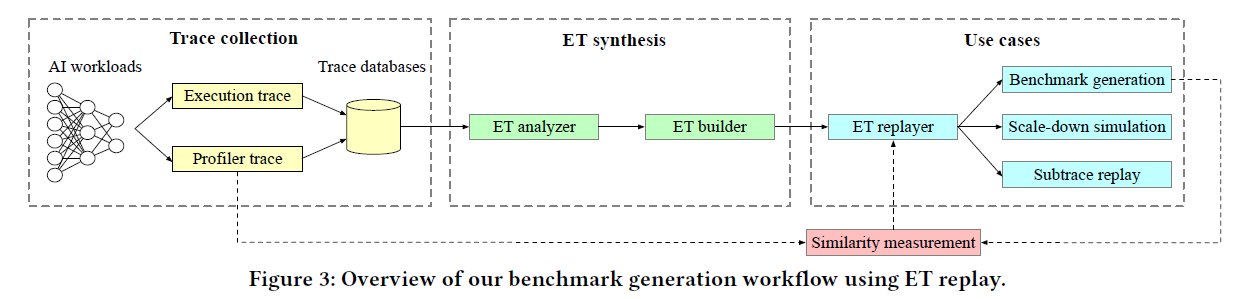

张量并行(Tensor Parallelism)

参考文献: Shoeybi M, Patwary M, Puri R, et al. Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism[J]. ArXiv, 2020 张量并行(